[BEHIND THE SCENES EXPLANATION IN ENGLISH]

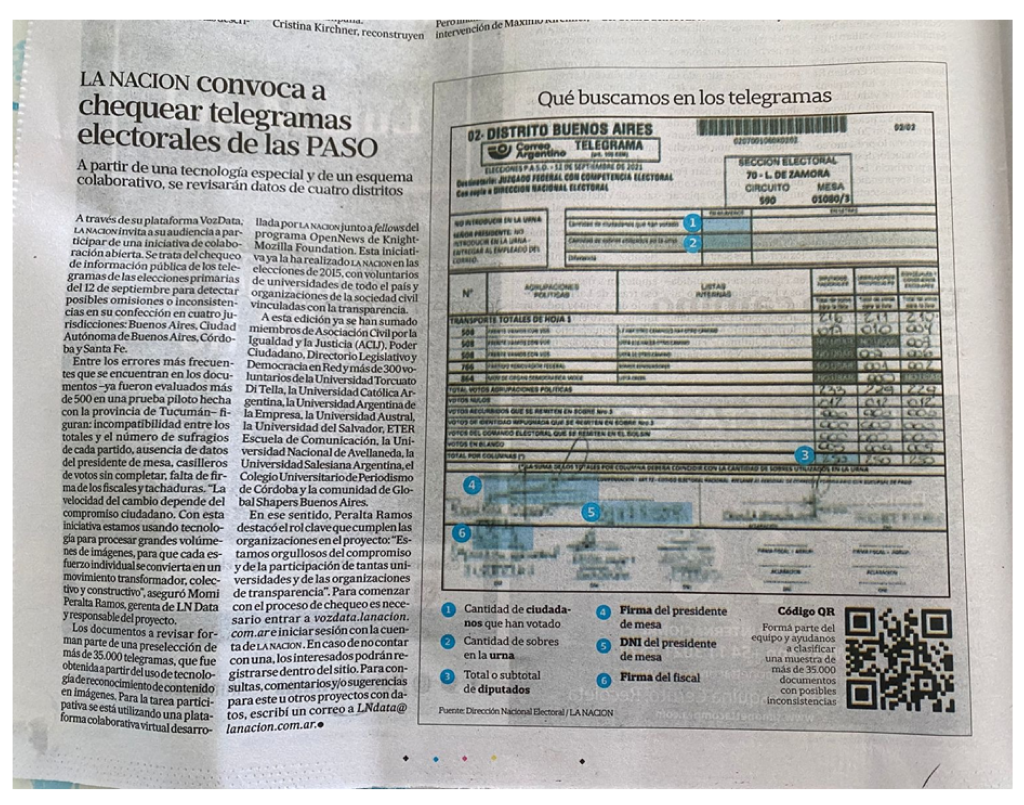

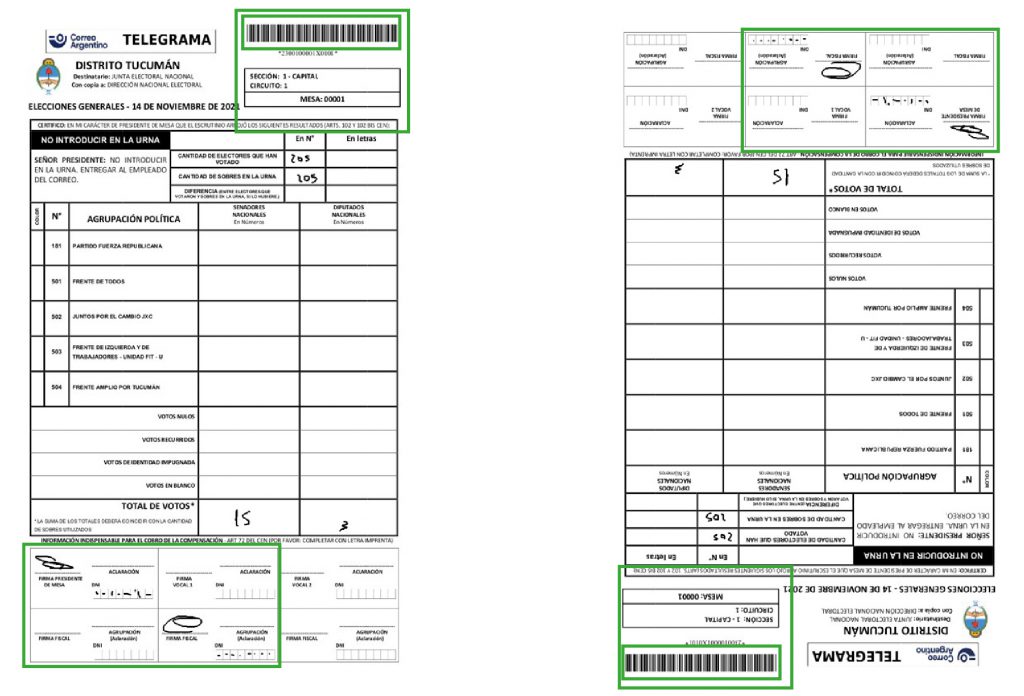

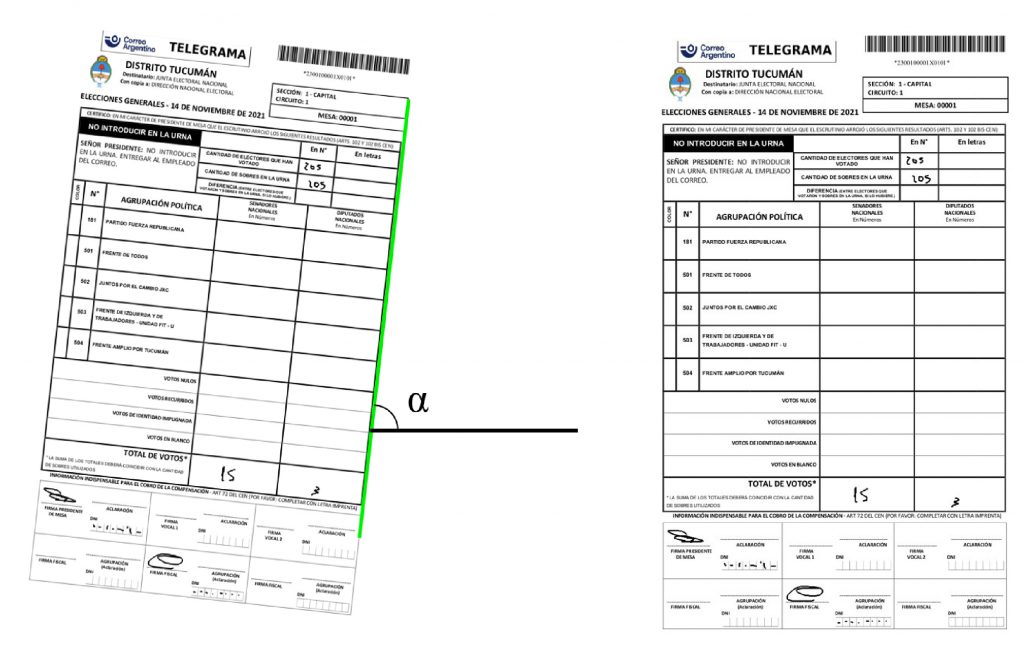

The purpose of the project was to monitor electoral documents with computer vision and then validate the data through crowdsourcing. The goal was to detect blank fields in the forms filled out by the chief officer of each polling station. These fields hold data that is fundamental to confirming the integrity of the electoral process and preventing possible fraud.

In Argentina, there are numerous party ballots, and votes are counted manually.

The project combined data collection with several analyses that shed light on facts of public interest which, without state-of-the-art technology, would otherwise have remained unknown.

IMPACT

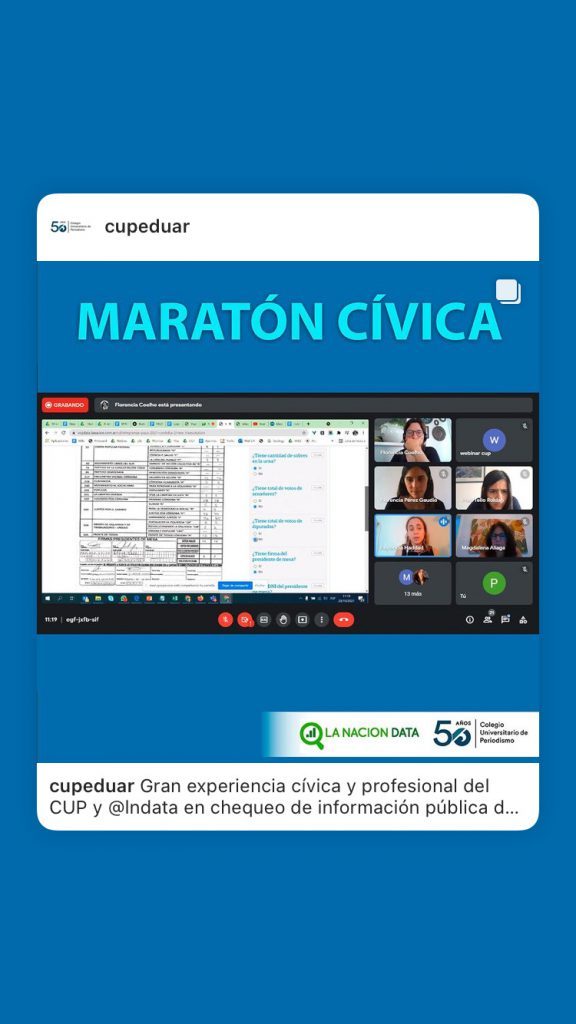

We were able to bring together more than 300 volunteers in 13 teams.

Volunteers knew that they were validating information previously extracted by image recognition software. We also worked with a software consultant.

Four transparency NGOs, ten universities and Global Shapers Argentina participated in this project.

Compared with our telegram monitoring project in 2015, computer vision allowed us to review 100,000 documents in 2021 instead of 16,000.

As for the general public, and in terms of empowering communities to discover important information for themselves, participation grew from 7 organizations in 2015 to 13 in 2021.

Feedback from volunteers revealed defects that affected the algorithm's performance on primary-election documents, which allowed us to fine-tune it for the general election. [More information below]

We published a news article with the findings from the primary elections. It alerted election supervisors and the chief officers of polling stations to the issues they needed to address to improve the information produced in the general election.

We did not find any fraud during the election, but we did make it clear to political parties and their electoral representatives that we were a group of journalists, students and non-governmental organizations working with artificial intelligence technology. We are watching with ‘super-powers’.

TOOLS & TECHNIQUES

The project had three components: artificial intelligence, automation (RPA, Robotic Process Automation) and visualization.

For data collection we used .NET with C# and Selenium (headless browser), together with the National Electoral Court API; for automation, UiPath; and for algorithm design, Python and OpenCV.

For the analysis of results and dashboards, we used Power BI, Excel and Google Sheets.

For data validation we used Vozdata, a collaborative online platform.

How did we use them?

The RPA automation followed these steps: 1) downloading the telegrams, 2) running the algorithm, 3) extracting the results and 4) generating the spreadsheet. The cycle then started again for each selected district.

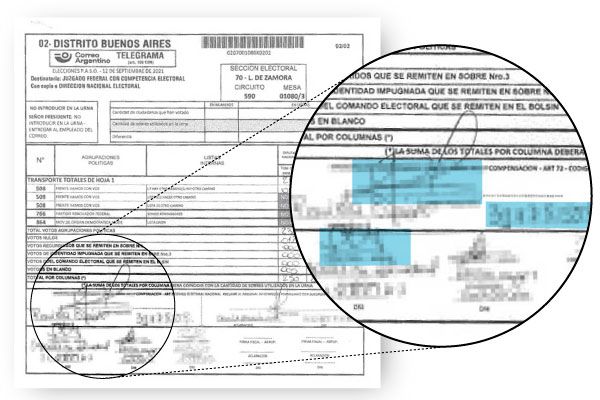

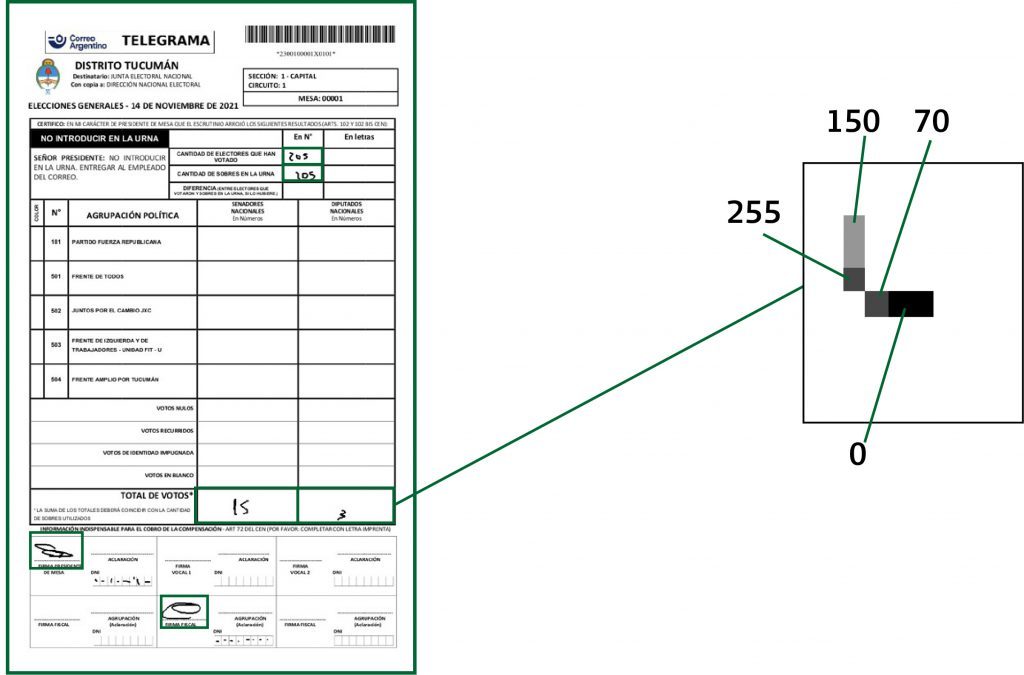
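The four-step cycle described above ran in UiPath. As an illustration only, the same loop can be sketched in Python; the step functions, column names and district names below are hypothetical placeholders that return dummy data, not the project's real downloader or OpenCV script.

```python
import csv
import io


def download_telegrams(district):
    """Hypothetical step 1: return the telegram image paths for one district."""
    return [f"{district}/telegram_{i:03d}.jpg" for i in range(3)]


def run_algorithm(paths):
    """Hypothetical step 2: per-telegram flags (1 = field looks blank)."""
    return {p: {"signature_missing": 0, "totals_blank": 0} for p in paths}


def extract_results(flags_by_file):
    """Step 3: flatten the algorithm output into spreadsheet rows."""
    return [[path, f["signature_missing"], f["totals_blank"]]
            for path, f in flags_by_file.items()]


def write_sheet(rows):
    """Step 4: render the rows as CSV text, ready to import into a sheet."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["file", "signature_missing", "totals_blank"])
    writer.writerows(rows)
    return buf.getvalue()


def process_districts(districts):
    """Run the four steps once per selected district, as the robot did."""
    sheets = {}
    for district in districts:
        paths = download_telegrams(district)   # 1) get the telegrams
        flags = run_algorithm(paths)            # 2) run the algorithm
        rows = extract_results(flags)           # 3) extract the results
        sheets[district] = write_sheet(rows)    # 4) generate the sheet
    return sheets


if __name__ == "__main__":
    for name, sheet in process_districts(["district_a", "district_b"]).items():
        print(name)
        print(sheet)
```

Keeping each step as a separate function mirrors the RPA design: one step (for example, the download) can be replaced without touching the others.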

Some highlights of the algorithm design: rather than using standard services that detect and digitize tables, we built a solution that used the overall rectangular outline of the telegram as its reference. This was adapted to each district analyzed, since each province has a different telegram design. Each field checked was measured by its height and width in pixels, with its coordinates taken relative to the top left corner.
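A minimal sketch of the template idea, assuming each district's layout is stored as named boxes measured in pixels from the top left corner of the telegram's rectangle. The district name, field names and coordinates are hypothetical, and the image is a plain list of pixel rows so the example stays dependency-free (with OpenCV, where images are NumPy arrays, the crop would be `img[y:y + h, x:x + w]`).

```python
from typing import NamedTuple


class Box(NamedTuple):
    x: int  # pixels from the left edge of the telegram rectangle
    y: int  # pixels from the top edge
    w: int  # width in pixels
    h: int  # height in pixels


# Hypothetical per-district layouts: each province prints a different
# telegram, so each district gets its own set of field coordinates.
TEMPLATES = {
    "district_a": {
        "signature": Box(x=40, y=300, w=200, h=60),
        "total_votes": Box(x=400, y=250, w=80, h=30),
    },
}


def crop(image, box):
    """Return the sub-image covered by `box` (image = list of pixel rows)."""
    return [row[box.x:box.x + box.w] for row in image[box.y:box.y + box.h]]


def fields_for(image, district, origin=(0, 0)):
    """Crop every templated field, shifting boxes by the rectangle's origin.

    `origin` is the (x, y) position of the telegram's top left corner in
    the scanned image (an assumption: found by some earlier detection step).
    """
    ox, oy = origin
    return {
        name: crop(image, box._replace(x=box.x + ox, y=box.y + oy))
        for name, box in TEMPLATES[district].items()
    }
```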

We also converted pixels from the RGB (red, green, blue) model to grayscale. As a result, each pixel was represented by a single value whose intensity varies from black to white.
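A sketch of the grayscale step using the ITU-R BT.601 luma weights, which are the same weights OpenCV documents for `cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)`, followed by one possible blank-field test. The test (share of pixels darker than a threshold) and both numeric thresholds are assumptions for illustration, not the values the project used.

```python
def to_gray(rgb_image):
    """Convert rows of (R, G, B) tuples to rows of 0-255 intensities."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def looks_blank(gray_field, dark_below=128, min_ink_ratio=0.01):
    """Treat a field as blank if fewer than 1% of its pixels are dark.

    Both thresholds are illustrative assumptions.
    """
    pixels = [p for row in gray_field for p in row]
    dark = sum(1 for p in pixels if p < dark_below)
    return dark / len(pixels) < min_ink_ratio
```

Working on a single intensity channel is what makes a simple threshold like this possible: "dark" becomes one comparison instead of a rule over three color channels.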

We also used an algorithm to smooth signature strokes, to avoid false positives (signatures flagged as missing) when the ink was not dark enough to be clearly detected.
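The source does not say which smoothing algorithm was used. One plausible approach, sketched here as an assumption: blur the signature box with a 3×3 mean filter (OpenCV provides this as `cv2.blur(img, (3, 3))`) to suppress isolated scanner specks, then compare each pixel with the box's own background brightness instead of a fixed black threshold, so that light ink still counts as a stroke. The `delta` and `min_ratio` values are hypothetical.

```python
from statistics import median


def box_blur(gray):
    """3x3 mean filter; edge pixels average only their in-bounds neighbours."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [
                gray[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            ]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def has_signature(gray_box, delta=25, min_ratio=0.02):
    """Detect ink relative to the box's own background (assumed values).

    A pixel counts as ink if it is at least `delta` levels darker than the
    median brightness of the blurred box, so faint ink is not mistaken for
    an empty box.
    """
    blurred = box_blur(gray_box)
    pixels = [p for row in blurred for p in row]
    background = median(pixels)
    ink = sum(1 for p in pixels if p < background - delta)
    return ink / len(pixels) >= min_ratio
```

A stroke with intensity 170 on paper at 230 would be missed by a fixed "darker than 128" rule, but it is detected here because it sits well below the box's own background.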

Once the algorithm had produced its results, we ran queries in Google Sheets with the QUERY function, which takes a formula that filters rows matching predefined conditions:

=QUERY(A:Z, "select A, B, C, D where J = 1 or K = 1 or Z = 1")

If any of these conditions was met, the query selected that telegram.

We then uploaded the selected telegrams to Vozdata, in separate folders for each electoral district.
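For readers working outside Google Sheets, the same filter plus the per-district grouping used for the Vozdata upload can be sketched in Python. It assumes a CSV export with hypothetical `telegram` and `district` columns, and with columns named J, K and Z holding 0/1 flags.

```python
import csv
import io
from collections import defaultdict

# Hypothetical flag columns standing in for sheet columns J, K and Z.
FLAG_COLUMNS = ("J", "K", "Z")


def flagged_by_district(csv_text):
    """Keep rows where any flag column equals 1, grouped by district.

    Mirrors: select A, B, C, D where J = 1 or K = 1 or Z = 1
    """
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        if any(row[col].strip() == "1" for col in FLAG_COLUMNS):
            groups[row["district"]].append(row["telegram"])
    return dict(groups)
```

Each key of the result corresponds to one upload folder.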

THE HARDEST PARTS OF THE PROJECT

Data Download

On the night of the primary election, and within the following 24 hours, we tried to download the telegrams by navigating the website with an in-house application. As this took 7 to 8 seconds per telegram, we switched to the National Electoral Court API, which reduced the time to 2 seconds per telegram.
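A quick worked check of what the switch meant, using the timings above; the batch of 1,000 telegrams and the 7.5 s midpoint are illustrative choices, not project figures:

```latex
\begin{aligned}
\text{speed-up} &= \frac{7\ \text{s}}{2\ \text{s}} = 3.5 \ \text{to}\ \frac{8\ \text{s}}{2\ \text{s}} = 4 \\
1000 \times 7.5\ \text{s} &= 7500\ \text{s} \approx 2.1\ \text{h} \quad \text{(website)} \\
1000 \times 2\ \text{s} &= 2000\ \text{s} \approx 33\ \text{min} \quad \text{(API)}
\end{aligned}
```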

We found that the files downloaded through the API were JPG files which, in the case of the Province of Buenos Aires, contained two telegram pages in a single file.

In our tests with files from the Province of Buenos Aires, the second page of the JPG file could not be viewed. So each file had to be converted into a .tiff file, split into its pages, and each page saved as a separate JPG file.

We downloaded the data for the remaining provinces with the second method (the API). When a file had more than one page, the application automatically split it into two or four separate pages. It was thanks to this evaluation that we discovered what was happening with the JPG files.
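A minimal sketch of the page-splitting step using the Pillow library, assuming (as the conversion described above implies) that the multi-page files can be opened as TIFF. The function name and output naming scheme are hypothetical; Pillow's `ImageSequence.Iterator` walks through every page of a multi-frame image.

```python
from pathlib import Path

from PIL import Image, ImageSequence


def split_pages(src, out_dir):
    """Save every page of a multi-page image as its own JPEG.

    Returns the list of written paths, one per page.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with Image.open(src) as img:
        for index, page in enumerate(ImageSequence.Iterator(img), start=1):
            target = out_dir / f"{Path(src).stem}_p{index}.jpg"
            # JPEG supports neither alpha nor palettes, so normalise to RGB.
            page.convert("RGB").save(target, "JPEG")
            written.append(target)
    return written
```

Because the loop iterates over whatever frames the file contains, the same function handles two-page and four-page files without special cases.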

Algorithm performance

Thanks to the insights raised by participants, we were able to adjust the algorithm for the general election. The most important was the discovery that some telegrams had been scanned upside down, which prevented the algorithm from locating the areas to check for empty boxes. We fine-tuned it to look for barcodes in the top right and bottom left corners and, when the telegram turned out to be upside down, to rotate it to the correct vertical position so the image recognition could run. If the application found no barcode in either position, the telegram was considered incomplete; some telegrams had no barcode at all.
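A dependency-free sketch of the orientation check. Real barcode detection would use a dedicated decoder; here, as a stated simplification, a corner "contains a barcode" if a large share of its pixels is dark, and the corner size and thresholds are hypothetical. A 180° rotation maps the top right corner onto the bottom left one, which is why those two corners are the ones checked.

```python
def dark_ratio(gray, x0, y0, w, h, dark_below=100):
    """Share of dark pixels in the w x h window starting at (x0, y0)."""
    window = [p for row in gray[y0:y0 + h] for p in row[x0:x0 + w]]
    return sum(1 for p in window if p < dark_below) / len(window)


def rotate_180(gray):
    """Rotate an image (list of rows) by 180 degrees."""
    return [list(reversed(row)) for row in reversed(gray)]


def normalise_orientation(gray, corner=0.25, min_dark=0.3):
    """Return (image, status): 'upright', 'rotated' or 'incomplete'.

    Assumes the barcode sits in the top right corner of an upright
    telegram, so an upside-down scan shows it in the bottom left.
    """
    h, w = len(gray), len(gray[0])
    cw, ch = max(1, int(w * corner)), max(1, int(h * corner))
    if dark_ratio(gray, w - cw, 0, cw, ch) >= min_dark:
        return gray, "upright"
    if dark_ratio(gray, 0, h - ch, cw, ch) >= min_dark:
        return rotate_180(gray), "rotated"
    return gray, "incomplete"  # no barcode found in either corner
```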

LEARNINGS

– AI experimentation takes time and resources. Collaboration is a great shortcut.

– AI is stats on steroids. There is always a margin of error, and human validation is essential to make it work for reporting.

– Crowdsourcing is easier when the common goal is vital to citizens, as in national elections where fraud is suspected or where margins are tight enough for it to change the result.

– We could reuse Vozdata because this project did not need a specialized annotation tool to validate the data produced by the algorithm.

– Vozdata code is open source! github.com/crowdata/crowdata

– When sending signals to the government and the political parties, the message that “We are watching” is stronger when you can show an alliance with different transparency NGOs and a diverse group of universities participating in the project.

– The message that your newsroom is using artificial intelligence to monitor processes is also powerful.